Cloudflare Faces 6-Hour Global Outage from BYOIP Config Error

Cloudflare suffered a major six-hour outage on February 20, 2026. It began at 17:48 UTC and hit customers using its Bring Your Own IP (BYOIP) service hardest. BGP routes for their prefixes were withdrawn by mistake, cutting off access to many sites and apps worldwide.

The issue came from a routine internal update. No cyberattack caused it. About 25% of BYOIP prefixes lost routing. Users saw timeouts and HTTP 403 errors on services like the 1.1.1.1 DNS resolver.

Cloudflare’s cleanup task went wrong. An API call passed an empty pending_delete flag, and the server interpreted it as “delete everything matching.” More than 1,100 prefixes were withdrawn before engineers stopped the task.

The withdrawals triggered BGP path hunting: routers kept trying alternative routes to the withdrawn prefixes until none remained, so connections stalled and then failed. Core products like CDN, Spectrum, and Magic Transit all took hits.
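Path hunting can be illustrated with a toy model. The sketch below is a simplification for illustration only, not a BGP implementation: the function name `path_hunt`, the neighbor labels, and the AS numbers (taken from the range reserved for documentation) are all hypothetical. It shows a router falling back to progressively longer paths as each neighbor's withdrawal arrives, until the prefix becomes unreachable.

```python
from typing import Dict, List, Optional


def path_hunt(
    candidate_paths: Dict[str, List[int]],
    withdrawal_order: List[str],
) -> List[Optional[List[int]]]:
    """Return the best path chosen after each withdrawal arrives.

    candidate_paths maps a neighbor name to the AS path it advertised.
    The "best" path here is simply the shortest one still available,
    which ignores every other BGP tie-breaker.
    """
    remaining = dict(candidate_paths)
    selections: List[Optional[List[int]]] = []
    for neighbor in withdrawal_order:
        # The neighbor withdraws its route, so it is no longer a candidate.
        remaining.pop(neighbor, None)
        if remaining:
            selections.append(min(remaining.values(), key=len))
        else:
            # No candidates left: the prefix is unreachable.
            selections.append(None)
    return selections


# Hypothetical neighbors and documentation-range AS numbers.
paths = {
    "A": [64500],
    "B": [64501, 64500],
    "C": [64502, 64501, 64500],
}
hunt = path_hunt(paths, ["A", "B", "C"])
```

In this toy run the router moves from A's path to B's longer path, then to C's, and only then gives up. Real convergence also depends on timers and routing policy that the sketch leaves out.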

Affected Services

Multiple Cloudflare offerings stopped working properly.

| Service | Impact Details |
|---|---|
| Core CDN | Site traffic timed out completely |
| Spectrum | Proxy apps failed to pass data |
| Dedicated Egress | Outbound traffic blocked |
| Magic Transit | Protected apps unreachable |

Recovery varied by customer. Some restored their routes through the dashboard. Others needed a full manual restore of service bindings across edge machines.

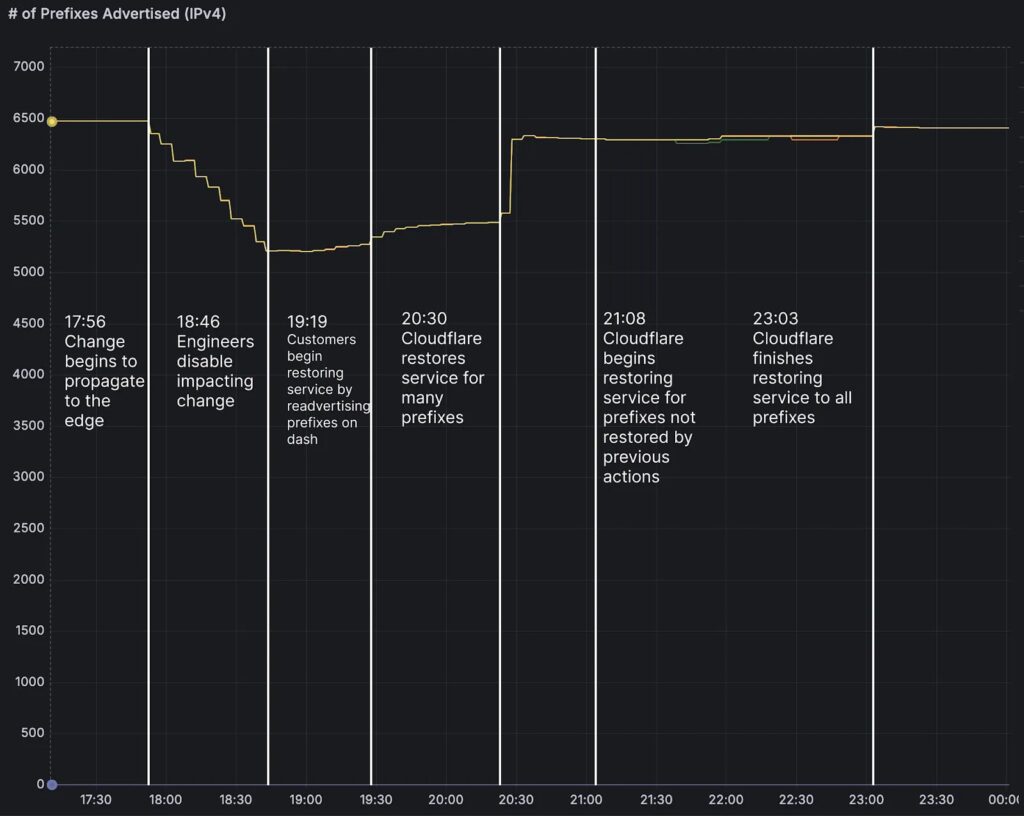

Timeline Breakdown

Key events unfolded over hours.

- 17:56 UTC: Bad task runs, prefixes withdraw

- 18:46 UTC: Engineers spot issue, halt process

- 19:19 UTC: Dashboard self-fix option live

- 23:03 UTC: Full global config restore done

The outage broke Cloudflare’s uptime promise. It affected about 25% of BYOIP prefixes globally.

Root Cause Analysis

A sub-task of “Code Orange: Fail Small” aimed to automate prefix cleanup. The API query passed pending_delete="" instead of a proper filter value, so the server queued every matching prefix for deletion.
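The failure mode described above can be sketched in a few lines. This is a minimal illustration, not Cloudflare's code: the function names, the prefix record shape, and the accepted value `true` are assumptions. It contrasts a loose handler, where an empty value silently disables the filter, with a strict one that rejects anything other than an explicit value.

```python
from typing import Dict, List
from urllib.parse import parse_qs

Prefix = Dict[str, object]


def select_prefixes_loose(prefixes: List[Prefix], query: str) -> List[Prefix]:
    """Hypothetical buggy handler: an empty flag skips the filter."""
    params = parse_qs(query, keep_blank_values=True)
    flag = params.get("pending_delete", [""])[0]
    if flag:
        return [p for p in prefixes if p["pending_delete"]]
    # An empty string is falsy, so every prefix is returned.
    return list(prefixes)


def select_prefixes_strict(prefixes: List[Prefix], query: str) -> List[Prefix]:
    """Hypothetical safer handler: only an explicit 'true' is accepted."""
    params = parse_qs(query, keep_blank_values=True)
    values = params.get("pending_delete")
    if values != ["true"]:
        raise ValueError(f"pending_delete must be 'true', got {values!r}")
    return [p for p in prefixes if p["pending_delete"]]


# Example data using documentation address ranges.
example_prefixes: List[Prefix] = [
    {"cidr": "192.0.2.0/24", "pending_delete": True},
    {"cidr": "198.51.100.0/24", "pending_delete": False},
]
```

With the query string `pending_delete=`, the loose handler returns both prefixes, while the strict handler raises an error instead of guessing the caller's intent.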

The prefixes’ bindings to services were wiped as well. BGP path hunting followed as the routes disappeared from the global routing table.

Cloudflare has planned fixes, including API schema standards, circuit breakers on BGP deletions, and configuration snapshots.

Official Response

Cloudflare’s incident report states: “A routine cleanup task misfired due to empty API flag.”

Engineers pushed global updates to recover. Dashboard toggles let some customers restore service quickly.

Remediation Plans

Cloudflare is accelerating the following changes:

- Standardize all API flags and values

- Add rate limits on BGP withdrawals

- Snapshot configs before production tasks

- Test cleanup logic in staging first
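One way to picture a rate limit on withdrawals is a sliding-window circuit breaker. The sketch below is an assumption-laden illustration, not Cloudflare's design: the class name `WithdrawalBreaker`, its parameters, and the thresholds are hypothetical. It allows a bounded number of withdrawals per time window and then trips, refusing further withdrawals until an operator resets it.

```python
import time
from collections import deque
from typing import Callable, Deque


class WithdrawalBreaker:
    """Hypothetical circuit breaker limiting withdrawals per time window."""

    def __init__(
        self,
        max_withdrawals: int,
        window_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_withdrawals = max_withdrawals
        self.window_seconds = window_seconds
        self._clock = clock
        self._events: Deque[float] = deque()
        self.tripped = False

    def allow(self) -> bool:
        """Return True if one more withdrawal may proceed."""
        if self.tripped:
            return False
        now = self._clock()
        # Drop withdrawals that have aged out of the window.
        while self._events and now - self._events[0] >= self.window_seconds:
            self._events.popleft()
        if len(self._events) >= self.max_withdrawals:
            # Too many withdrawals in the window: stop until a human resets.
            self.tripped = True
            return False
        self._events.append(now)
        return True

    def reset(self) -> None:
        """Manual reset after an operator has reviewed the situation."""
        self._events.clear()
        self.tripped = False
```

A cleanup job would call `allow()` before each withdrawal and stop when it returns `False`. Requiring a manual reset is a deliberate choice in this sketch, so that a runaway task cannot resume on its own once the window expires.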

FAQ

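What caused the outage?

An internal API bug deleted more than 1,100 BYOIP prefixes during a cleanup task.

How long did it last?

Six hours, seven minutes from 17:48 UTC on Feb 20.

Which services were affected?

CDN, Spectrum, Magic Transit, and Dedicated Egress.

Was it a cyberattack?

No. It was purely a configuration error, as confirmed by Cloudflare.

What is Cloudflare doing to prevent a repeat?

Code Orange upgrades with added safeguards are due to roll out soon.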
