Google Says Hackers Are Abusing Gemini AI for Every Stage of Cyberattacks

State-backed and criminal hackers are increasingly using Google’s Gemini AI model to assist with cyberattack campaigns. The misuse covers many parts of an attack lifecycle, from reconnaissance to post-compromise actions, Google’s Threat Intelligence Group (GTIG) warns in a new report.

According to Google, adversaries from China, Iran, Russia, and North Korea have used Gemini AI to support activities such as target profiling, phishing lure creation, coding assistance, vulnerability research, and troubleshooting. These actors are also integrating AI into malware development and other harmful tools.

Access content across the globe at the highest speed rate.

70% of our readers choose Private Internet Access

70% of our readers choose ExpressVPN

Browse the web from multiple devices with industry-standard security protocols.

Faster dedicated servers for specific actions (currently at summer discounts)

“Threat actors are abusing Gemini AI to support campaigns from reconnaissance to command and control development and data exfiltration,” Google says in the report.

How Hackers Use Gemini AI

AI tools like Gemini offer powerful capabilities. But threat actors are taking advantage of these features to improve and accelerate cyberattack operations.

| Use Case | Description |

|---|---|

| Reconnaissance | Gathering information about targets and vulnerabilities. |

| Phishing Lures | Crafting convincing messages and fake sites. |

| Coding Support | Fixing bugs, testing scripts, or generating code. |

| Malware Development | Adding AI-assisted features to malware payloads. |

| Troubleshooting | Using AI to refine attack tools or tactics. |

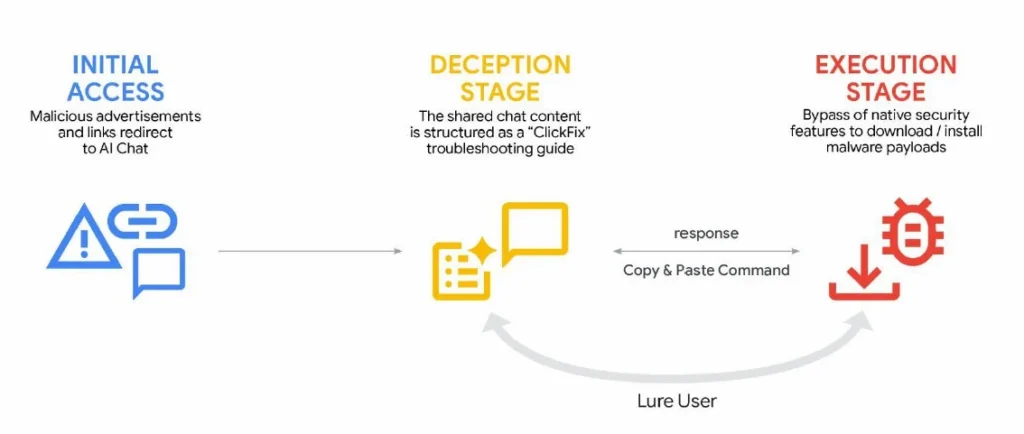

Researchers say this misuse is not limited to simple research tasks. In some cases, malware families now integrate AI to generate code dynamically or obfuscate their operations.

Government-Linked Hackers and AI Abuse

Google’s analysis shows that state-backed groups have experimented with Gemini AI to aid their work:

- China-linked actors used AI to automate vulnerability analysis, test exploit techniques, and assist with offensive planning.

- Iran-linked groups leveraged AI for social engineering and to speed up creation of custom malicious tools.

- North Korean and Russian groups used AI to support various coding and research tasks.

Google Threat Intelligence Group chief analyst John Hultquist said the use of Gemini by sanctioned groups highlights a trend toward adopting AI for “semi-autonomous offensive operations.”

Malware Families Enhanced by AI

Several malicious tools and frameworks have shown signs of being developed with the help of AI models like Gemini:

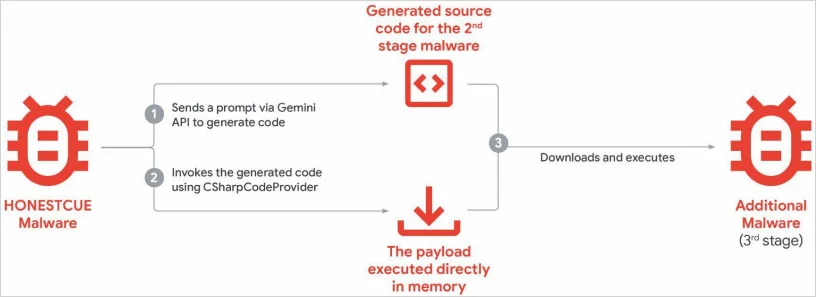

- HonestCue is a proof-of-concept malware that uses the Gemini API to generate C# code for second-stage payloads and execute them in memory.

- CoinBait is a phishing kit built as a fake cryptocurrency platform. Artifacts in the code suggest AI-assisted development.

These tools indicate an evolving level of sophistication among malicious developers, who can use AI to rapidly prototype and refine components of their attacks.

AI Model Extraction and Knowledge Distillation

Google also warns of model extraction and knowledge distillation attacks. These occur when attackers use legitimate API access to repeatedly query and replicate the decision-making of a model like Gemini, potentially creating a copycat AI.

“Model extraction and subsequent knowledge distillation enable an attacker to accelerate AI model development quickly and at a significantly lower cost,” GTIG researchers say.

Such tactics do not directly endanger users’ data, but they pose a commercial and intellectual property risk to AI developers and the broader ecosystem.

Other AI Security Concerns Linked to Gemini

Beyond misuse in cyberattacks, independent research has exposed additional AI security risks tied to Gemini:

- Academics have identified promptware attacks that can manipulate AI output through indirect prompt injection, including via calendar invites or shared documents. These could be used to perform harmful operations such as phishing, data exfiltration, or device control.

- According to Wikipedia Past vulnerabilities showed how hidden directives in prompts might influence Gemini’s long-term memory, though these risks were rated low by developers.

What Google Is Doing to Combat Misuse

To counter these threats, Google says it has taken multiple steps:

- Disabled accounts and infrastructure tied to abusive activity.

- Deployed targeted defenses in Gemini’s classification systems to make misuse harder.

- Continues to test and improve security measures and safety guardrails in its AI platforms.

The company stresses that ongoing vigilance and collaboration across the cybersecurity community are necessary to protect AI technology and users.

Frequently Asked Questions (FAQ)

A: GTIG says these abuses do not directly target user data, but the threat landscape is evolving and risks remain if AI is integrated into services that access personal information.

A: Gemini’s model is designed with guardrails, but adversaries have used social engineering prompts to bypass some protections. Ongoing security updates aim to reduce this risk.

A: Yes. AI can tailor language and messages based on context, making phishing content more tailored and harder to detect.

A: Yes. Misuse of generative AI models, including for malware aides and code generation, is a broader industry concern.

A: Enterprises should combine AI safety practices with strong cybersecurity protocols, monitor AI usage logs, and regularly update tools to incorporate the latest security fixes.

Read our disclosure page to find out how can you help VPNCentral sustain the editorial team Read more

User forum

0 messages